22 Ensemble prediction with multiple models

Configurations to run this chapter

# load "pysits" library

from pysits import *

from pathlib import Path

# set tempdir if it does not exist

tempdir_py = Path.home() / "sitsbook/tempdir/Python/cl_ensembleprediction"

tempdir_py.mkdir(parents=True, exist_ok=True)22.1 Introduction to ensemble prediction

Ensemble prediction is a powerful technique for combining predictions from multiple models to produce more accurate and robust predictions. Errors of individual models cancel out or are reduced when combined with the predictions of other models. As a result, ensemble predictions can lead to better overall accuracy and reduce the risk of overfitting. This can be especially useful when working with complex or uncertain data. By combining the predictions of multiple models, users can identify which features or factors are most important for making accurate predictions. When using ensemble methods, choosing diverse models with different sources of error is essential to ensure that the ensemble predictions are more precise and robust.

The sits package provides sits_combine_predictions() to estimate ensemble predictions using probability cubes produced by sits_classify() and optionally post-processed with sits_smooth(). There are two ways to make ensemble predictions from multiple models:

Averaging: In this approach, the predictions of each model are averaged to produce the final prediction. This method works well when the models have similar accuracy and errors.

Uncertainty: Predictions from different models are compared in terms of their uncertainties on a pixel-by-pixel basis; predictions with lower uncertainty are chosen as being more likely to be valid.

In what follows, we will use the same sample dataset and data cube used in Chapter Image classification in data cubes to illustrate how to produce an ensemble prediction. The dataset samples_deforestation_rondonia consists of 6,007 samples collected from Sentinel-2 images covering the state of Rondonia. Each time series contains values from all Sentinel-2/2A spectral bands for year 2022 in 16-day intervals. The data cube is a subset of the Sentinel-2 tile “20LMR” which contains all spectral bands, plus spectral indices “NVDI”, “EVI” and “NBR” for the year 2022.

22.2 Recovering the data cube

The first step is to recover the data cube which is available in the sitsdata package, and to select only the spectral bands.

# Files are available in a local directory

data_dir <- system.file("extdata/Rondonia-20LMR/", package = "sitsdata")

# Read data cube

ro_cube_20LMR <- sits_cube(

source = "MPC",

collection = "SENTINEL-2-L2A",

data_dir = data_dir

)

# reduce the number of bands

ro_cube_20LMR <- sits_select(

data = ro_cube_20LMR,

bands = c(

"B02", "B03", "B04", "B05",

"B06", "B07", "B08", "B11",

"B12", "B8A"

)

)

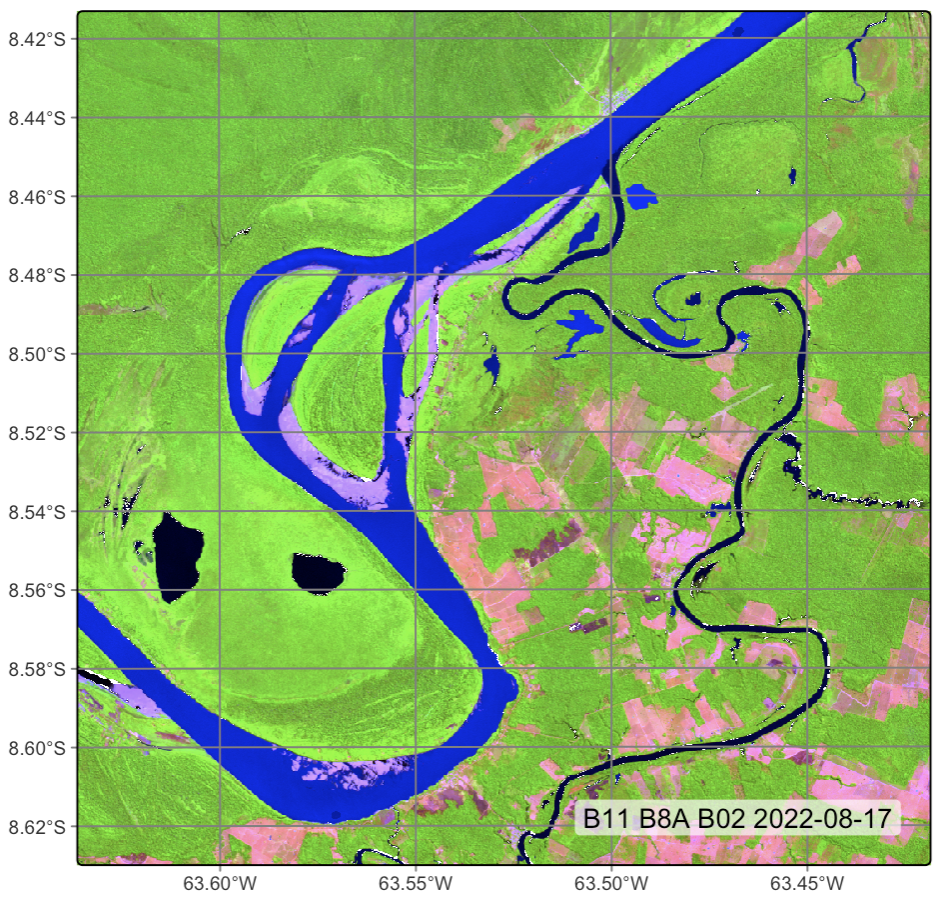

# plot one time step of the cube

plot(

ro_cube_20LMR,

blue = "B02",

green = "B8A",

red = "B11",

date = "2022-08-17"

)# Files are available in a local directory

data_dir = r_package_dir("extdata/Rondonia-20LMR/", package = "sitsdata")

# Read data cube

ro_cube_20LMR = sits_cube(

source = "MPC",

collection = "SENTINEL-2-L2A",

data_dir = data_dir

)

# reduce the number of bands

ro_cube_20LMR = sits_select(

data = ro_cube_20LMR,

bands = (

"B02", "B03", "B04", "B05",

"B06", "B07", "B08", "B11",

"B12", "B8A"

)

)

# plot one time step of the cube

plot(

ro_cube_20LMR,

blue = "B02",

green = "B8A",

red = "B11",

date = "2022-08-17"

)

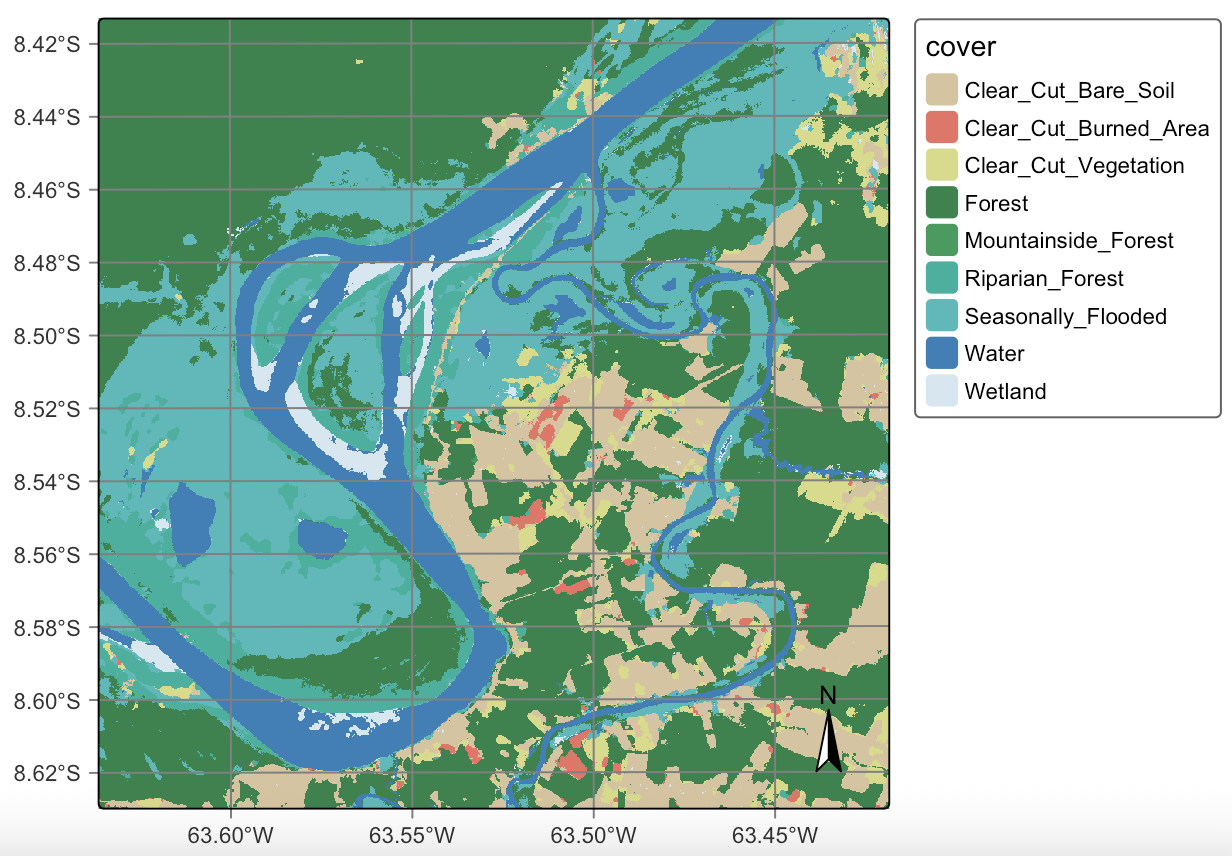

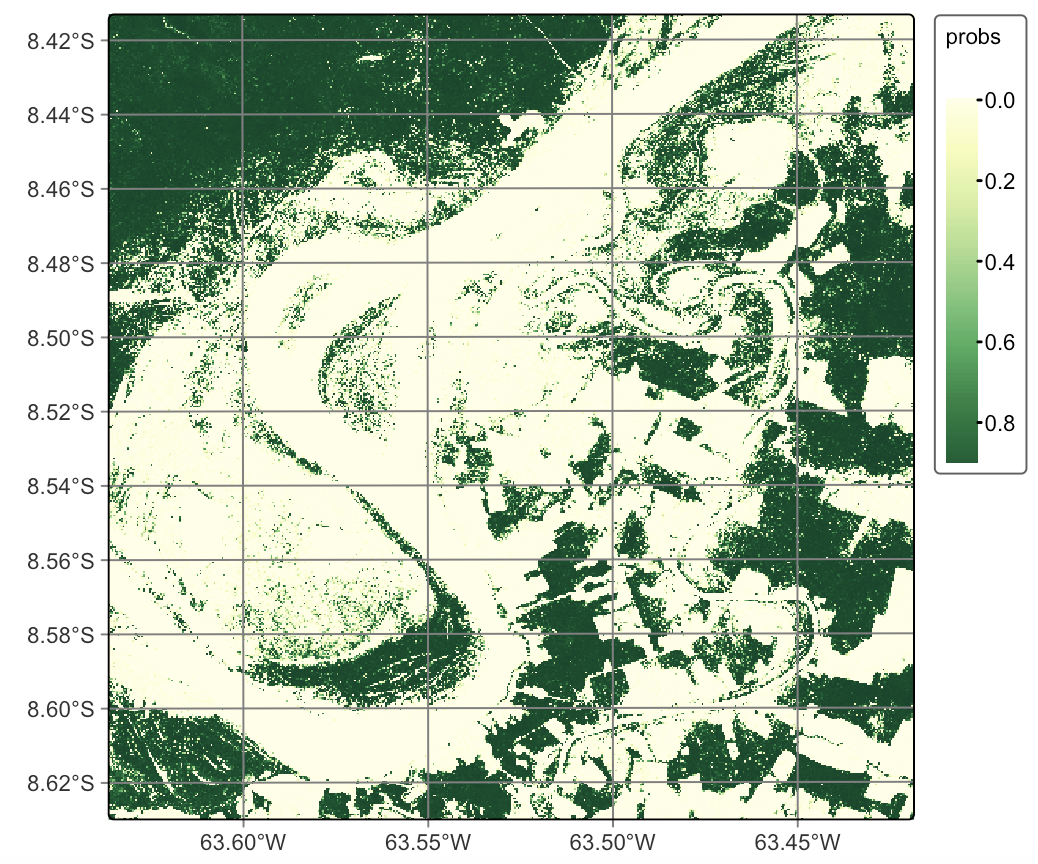

22.3 Classification using Random Forest

We will train three models: Random Forest (RF), Light Temporal Attention Encoder (LTAE), and Temporal Convolution Neural Networks (TempCNN), classify the cube with them, and then combine their results. The example uses all spectral bands. We first run the RF classification.

Probabilities

# train a Random Forest model

rfor_model <- sits_train(samples_deforestation_rondonia, sits_rfor())

# classify the data cube

ro_cube_20LMR_rfor_probs <- sits_classify(

ro_cube_20LMR,

rfor_model,

output_dir = tempdir_r,

multicores = 6,

memsize = 24,

version = "rfor"

)

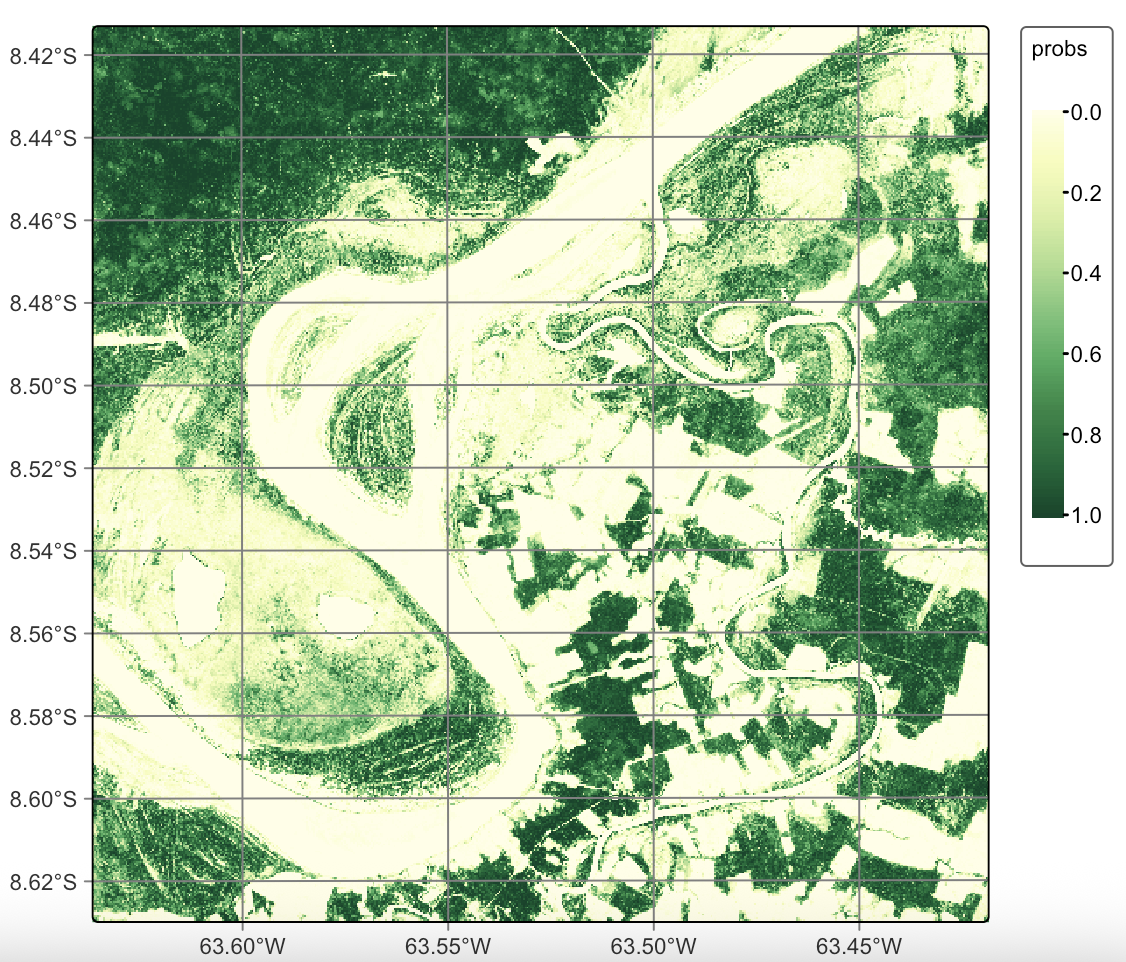

# plot the probabilities for class Forest

plot(ro_cube_20LMR_rfor_probs, labels = "Forest")# load data

samples_deforestation_rondonia = load_samples(

name = "samples_deforestation_rondonia",

package = "sitsdata"

)

# train a Random Forest model

rfor_model = sits_train(samples_deforestation_rondonia, sits_rfor())

# classify the data cube

ro_cube_20LMR_rfor_probs = sits_classify(

ro_cube_20LMR,

rfor_model,

output_dir = tempdir_py,

multicores = 6,

memsize = 24,

version = "rfor"

)

# plot the probabilities for class Forest

plot(ro_cube_20LMR_rfor_probs, labels = "Forest")

Variance

ro_cube_20LMR_rfor_variance <- sits_variance(

ro_cube_20LMR_rfor_probs,

window_size = 9,

output_dir = tempdir_r,

multicores = 6,

memsize = 24,

version = "rfor"

)

summary(ro_cube_20LMR_rfor_variance) Clear_Cut_Bare_Soil Clear_Cut_Burned_Area Clear_Cut_Vegetation Forest

75% 4.62 4.95 0.420 1.0800

80% 5.17 5.38 0.510 1.4900

85% 5.57 5.70 0.670 2.2115

90% 5.93 6.03 1.261 4.4000

95% 6.64 6.69 5.290 7.1000

100% 17.31 13.45 13.950 27.2900

Mountainside_Forest Riparian_Forest Seasonally_Flooded Water Wetland

75% 0.690 0.9100 0.37 0.5300 4.58

80% 1.960 1.3800 0.47 0.5300 5.21

85% 3.830 2.5815 0.61 1.1645 5.60

90% 5.241 4.3600 0.95 2.3800 5.96

95% 6.220 6.2000 2.19 7.4215 6.45

100% 11.900 31.3200 18.27 42.9700 23.91ro_cube_20LMR_rfor_variance = sits_variance(

ro_cube_20LMR_rfor_probs,

window_size = 9,

output_dir = tempdir_py,

multicores = 6,

memsize = 24,

version = "rfor"

)

summary(ro_cube_20LMR_rfor_variance) Clear_Cut_Bare_Soil Clear_Cut_Burned_Area Clear_Cut_Vegetation Forest Mountainside_Forest Riparian_Forest Seasonally_Flooded Water Wetland

75% 4.62 4.95 0.420 1.0800 0.690 0.9100 0.37 0.5300 4.58

80% 5.17 5.38 0.510 1.4900 1.960 1.3800 0.47 0.5300 5.21

85% 5.57 5.70 0.670 2.2115 3.830 2.5815 0.61 1.1645 5.60

90% 5.93 6.03 1.261 4.4000 5.241 4.3600 0.95 2.3800 5.96

95% 6.64 6.69 5.290 7.1000 6.220 6.2000 2.19 7.4215 6.45

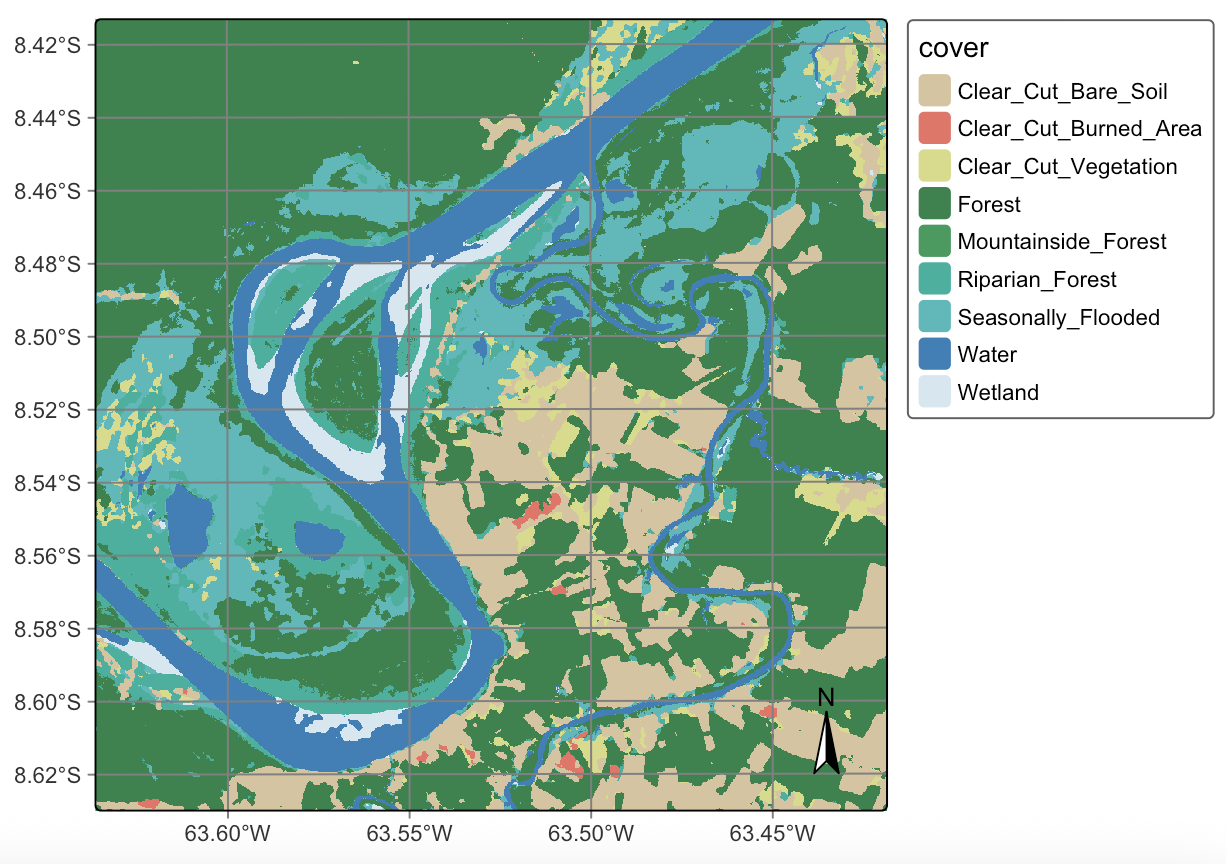

100% 17.31 13.45 13.950 27.2900 11.900 31.3200 18.27 42.9700 23.91Based on the variance values, we apply the smoothness hyperparameter according to the recommendations proposed before. We choose values of \(\sigma^2_{k}\) that reflect our prior expectation of the spatial patterns of each class. For classes Clear_Cut_Vegetation and Clear_Cut_Burned_Area, to produce denser spatial clusters and remove “salt-and-pepper” outliers, we take \(\sigma^2_{k}\) values in 95%-100% range. In the case of the most frequent classes Forest and Clear_Cut_Bare_Soil we want to preserve their original spatial shapes as much as possible; the same logic applies to less frequent classes Water and Wetland. For this reason, we set \(\sigma^2_{k}\) values in the 75%-80% range for these classes. The class spatial patterns correspond to our prior expectations.

ro_cube_20LMR_rfor_bayes <- sits_smooth(

ro_cube_20LMR_rfor_probs,

output_dir = tempdir_r,

smoothness = c(

"Clear_Cut_Bare_Soil" = 5.25,

"Clear_Cut_Burned_Area" = 15.0,

"Clear_Cut_Vegetation" = 12.0,

"Forest" = 1.8,

"Mountainside_Forest" = 6.5,

"Riparian_Forest" = 6.0,

"Seasonally_Flooded" = 3.5,

"Water" = 1.5,

"Wetland" = 5.5),

multicores = 6,

memsize = 24,

version = "rfor"

)

ro_cube_20LMR_rfor_class <- sits_label_classification(

ro_cube_20LMR_rfor_bayes,

output_dir = tempdir_r,

multicores = 6,

memsize = 24,

version = "rfor"

)

plot(ro_cube_20LMR_rfor_class,

legend_text_size = 0.7, legend_position = "outside")ro_cube_20LMR_rfor_bayes = sits_smooth(

ro_cube_20LMR_rfor_probs,

output_dir = tempdir_py,

smoothness = dict(

Clear_Cut_Bare_Soil = 5.25,

Clear_Cut_Burned_Area = 15.0,

Clear_Cut_Vegetation = 12.0,

Forest = 1.8,

Mountainside_Forest = 6.5,

Riparian_Forest = 6.0,

Seasonally_Flooded = 3.5,

Water = 1.5,

Wetland = 5.5),

multicores = 6,

memsize = 24,

version = "rfor"

)

ro_cube_20LMR_rfor_class = sits_label_classification(

ro_cube_20LMR_rfor_bayes,

output_dir = tempdir_py,

multicores = 6,

memsize = 24,

version = "rfor"

)

plot(ro_cube_20LMR_rfor_class,

legend_text_size = 0.7, legend_position = "outside")

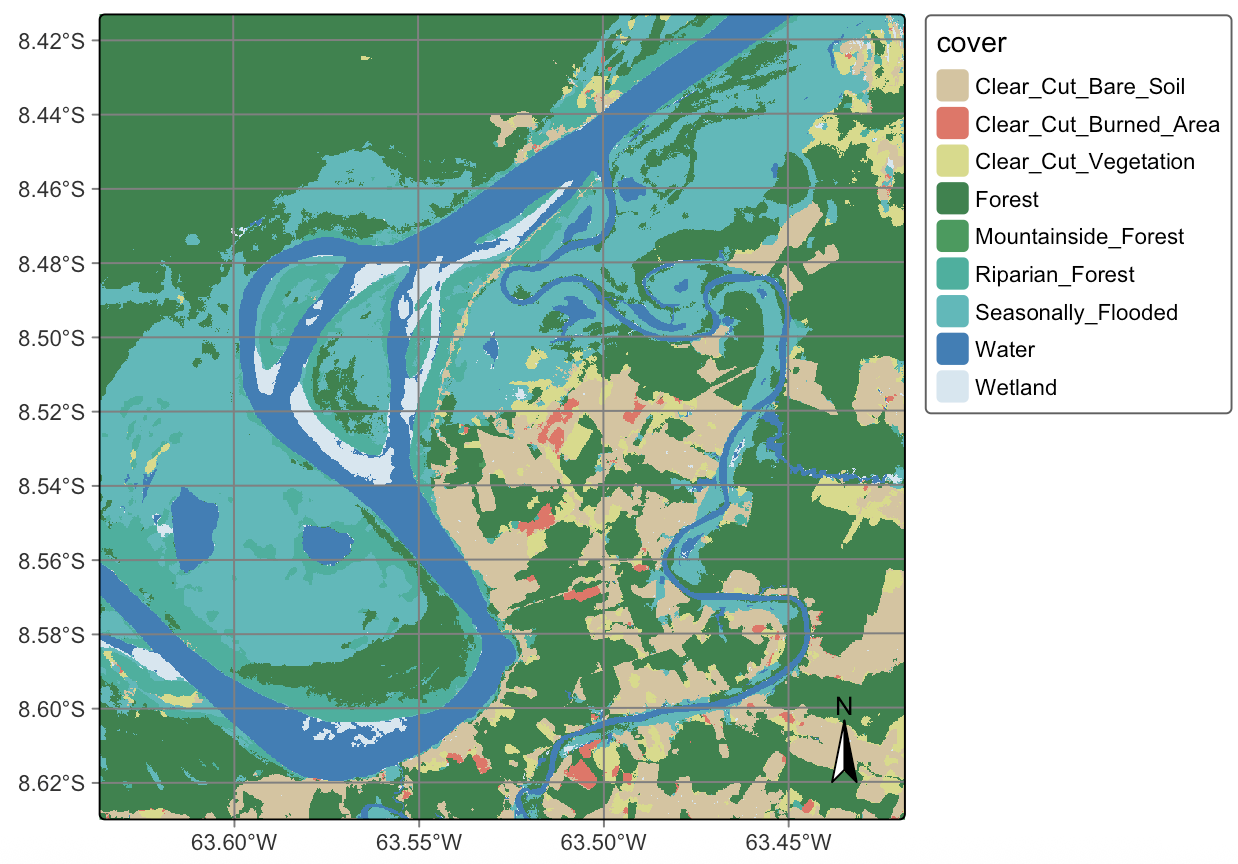

22.4 Classification using Temporal Convolution Neural Networks (TempCNN)

The next step is to classify the same area using a tempCNN algorithm, as shown below.

Probabilities

# train a tempcnn model

tcnn_model <- sits_train(samples_deforestation_rondonia,

sits_tempcnn())

# classify the data cube

ro_cube_20LMR_tcnn_probs <- sits_classify(

ro_cube_20LMR,

tcnn_model,

output_dir = tempdir_r,

multicores = 2,

memsize = 8,

gpu_memory = 8,

version = "tcnn"

)

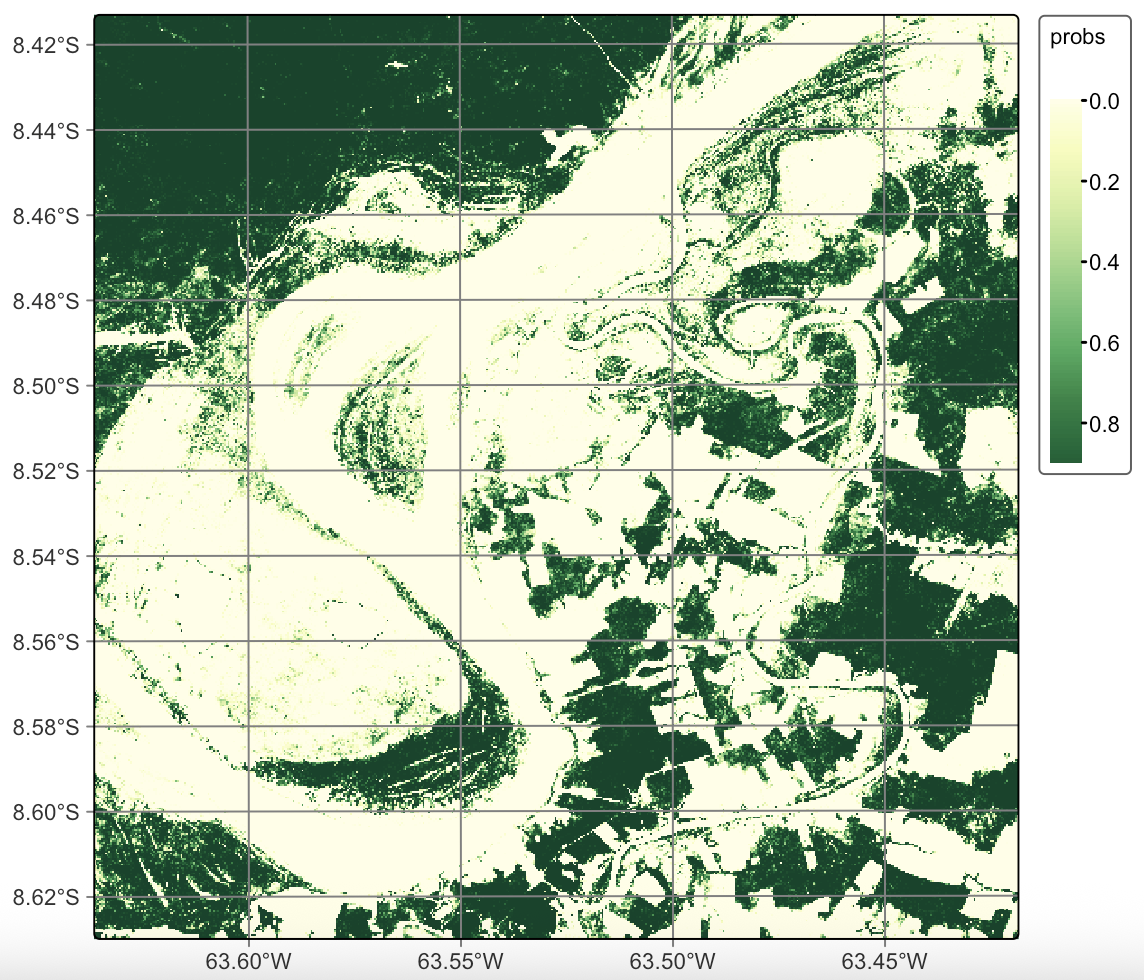

# plot the probabilities for class Forest

plot(ro_cube_20LMR_tcnn_probs, labels = "Forest")# train a tempcnn model

tcnn_model = sits_train(samples_deforestation_rondonia,

sits_tempcnn())

# classify the data cube

ro_cube_20LMR_tcnn_probs = sits_classify(

ro_cube_20LMR,

tcnn_model,

output_dir = tempdir_py,

multicores = 2,

memsize = 8,

gpu_memory = 8,

version = "tcnn"

)

# plot the probabilities for class Forest

plot(ro_cube_20LMR_tcnn_probs, labels = "Forest")

Variance

ro_cube_20LMR_tcnn_variance <- sits_variance(

ro_cube_20LMR_tcnn_probs,

window_size = 9,

output_dir = tempdir_r,

multicores = 6,

memsize = 24,

version = "tcnn"

)

summary(ro_cube_20LMR_tcnn_variance) Clear_Cut_Bare_Soil Clear_Cut_Burned_Area Clear_Cut_Vegetation Forest

75% 1.1900 1.150 2.17 2.390

80% 1.5200 1.392 2.54 3.050

85% 2.0800 1.730 3.00 4.030

90% 3.3800 2.370 3.80 5.821

95% 6.9225 3.820 5.35 10.560

100% 36.8900 22.630 32.26 44.410

Mountainside_Forest Riparian_Forest Seasonally_Flooded Water Wetland

75% 1.40 2.600 2.5225 0.550 1.4400

80% 1.63 3.122 3.1000 0.680 1.7800

85% 1.90 3.910 4.1115 0.900 2.2600

90% 2.32 5.300 5.9500 1.390 3.0400

95% 3.16 8.440 9.6105 9.544 4.7205

100% 11.21 56.980 41.7900 53.920 50.7400ro_cube_20LMR_tcnn_variance = sits_variance(

ro_cube_20LMR_tcnn_probs,

window_size = 9,

output_dir = tempdir_py,

multicores = 6,

memsize = 24,

version = "tcnn"

)

summary(ro_cube_20LMR_tcnn_variance) Clear_Cut_Bare_Soil Clear_Cut_Burned_Area Clear_Cut_Vegetation Forest Mountainside_Forest Riparian_Forest Seasonally_Flooded Water Wetland

75% 1.1900 1.150 2.17 2.390 1.40 2.600 2.5225 0.550 1.4400

80% 1.5200 1.392 2.54 3.050 1.63 3.122 3.1000 0.680 1.7800

85% 2.0800 1.730 3.00 4.030 1.90 3.910 4.1115 0.900 2.2600

90% 3.3800 2.370 3.80 5.821 2.32 5.300 5.9500 1.390 3.0400

95% 6.9225 3.820 5.35 10.560 3.16 8.440 9.6105 9.544 4.7205

100% 36.8900 22.630 32.26 44.410 11.21 56.980 41.7900 53.920 50.7400Smooth and label classification

ro_cube_20LMR_tcnn_bayes <- sits_smooth(

ro_cube_20LMR_tcnn_probs,

output_dir = tempdir_r,

window_size = 11,

smoothness = c(

"Clear_Cut_Bare_Soil" = 1.5,

"Clear_Cut_Burned_Area" = 20.0,

"Clear_Cut_Vegetation" = 25.0,

"Forest" = 4.0,

"Mountainside_Forest" = 3.0,

"Riparian_Forest" = 40.0,

"Seasonally_Flooded" = 30.0,

"Water" = 1.0,

"Wetland" = 2.0),

multicores = 2,

memsize = 6,

version = "tcnn"

)

ro_cube_20LMR_tcnn_class <- sits_label_classification(

ro_cube_20LMR_tcnn_bayes,

output_dir = tempdir_r,

multicores = 2,

memsize = 6,

version = "tcnn"

)

plot(ro_cube_20LMR_tcnn_class,

legend_text_size = 0.7, legend_position = "outside")ro_cube_20LMR_tcnn_bayes = sits_smooth(

ro_cube_20LMR_tcnn_probs,

output_dir = tempdir_py,

window_size = 11,

smoothness = dict(

Clear_Cut_Bare_Soil = 1.5,

Clear_Cut_Burned_Area = 20.0,

Clear_Cut_Vegetation = 25.0,

Forest = 4.0,

Mountainside_Forest = 3.0,

Riparian_Forest = 40.0,

Seasonally_Flooded = 30.0,

Water = 1.0,

Wetland = 2.0),

multicores = 2,

memsize = 6,

version = "tcnn"

)

ro_cube_20LMR_tcnn_class = sits_label_classification(

ro_cube_20LMR_tcnn_bayes,

output_dir = tempdir_py,

multicores = 2,

memsize = 6,

version = "tcnn"

)

plot(ro_cube_20LMR_tcnn_class,

legend_text_size = 0.7, legend_position = "outside")

22.5 Classification using Lightweight Temporal Attention Encoder (LTAE)

The third model is the Light Temporal Attention Encoder (LTAE), which has been discussed in the Machine Learning chapter.

Probabilities

# train a tempcnn model

ltae_model <- sits_train(samples_deforestation_rondonia, sits_lighttae())

# classify the data cube

ro_cube_20LMR_ltae_probs <- sits_classify(

ro_cube_20LMR,

ltae_model,

output_dir = tempdir_r,

multicores = 2,

memsize = 8,

gpu_memory = 8,

version = "ltae"

)

# plot the probabilities for class Forest

plot(ro_cube_20LMR_ltae_probs, labels = "Forest")# train a tempcnn model

ltae_model = sits_train(samples_deforestation_rondonia, sits_lighttae())

# classify the data cube

ro_cube_20LMR_ltae_probs = sits_classify(

ro_cube_20LMR,

ltae_model,

output_dir = tempdir_py,

multicores = 2,

memsize = 8,

gpu_memory = 8,

version = "ltae"

)

# plot the probabilities for class Forest

plot(ro_cube_20LMR_ltae_probs, labels = "Forest")

We then compute the variance of the probability cube produced by the LTAE algorithm.

Variance

ro_cube_20LMR_ltae_variance <- sits_variance(

ro_cube_20LMR_ltae_probs,

window_size = 9,

output_dir = tempdir_r,

multicores = 6,

memsize = 24,

version = "ltae"

)

summary(ro_cube_20LMR_ltae_variance) Clear_Cut_Bare_Soil Clear_Cut_Burned_Area Clear_Cut_Vegetation Forest

75% 0.7300 0.51 2.2800 1.97

80% 1.1100 0.70 2.8320 2.86

85% 1.8000 1.00 3.5300 3.84

90% 3.1710 1.48 4.4310 5.07

95% 5.7205 2.50 5.7605 7.11

100% 29.6300 13.77 14.9000 24.17

Mountainside_Forest Riparian_Forest Seasonally_Flooded Water Wetland

75% 0.42 1.010 3.6425 0.3000 0.660

80% 0.52 1.560 4.3800 0.3400 1.042

85% 0.67 2.470 5.2315 0.4000 1.880

90% 1.00 3.871 6.5210 0.5400 3.340

95% 1.68 6.080 8.7400 8.1905 6.360

100% 12.74 24.430 31.3100 44.8400 43.080ro_cube_20LMR_ltae_variance = sits_variance(

ro_cube_20LMR_ltae_probs,

window_size = 9,

output_dir = tempdir_py,

multicores = 6,

memsize = 24,

version = "ltae"

)

summary(ro_cube_20LMR_ltae_variance) Clear_Cut_Bare_Soil Clear_Cut_Burned_Area Clear_Cut_Vegetation Forest Mountainside_Forest Riparian_Forest Seasonally_Flooded Water Wetland

75% 0.7300 0.51 2.2800 1.97 0.42 1.010 3.6425 0.3000 0.660

80% 1.1100 0.70 2.8320 2.86 0.52 1.560 4.3800 0.3400 1.042

85% 1.8000 1.00 3.5300 3.84 0.67 2.470 5.2315 0.4000 1.880

90% 3.1710 1.48 4.4310 5.07 1.00 3.871 6.5210 0.5400 3.340

95% 5.7205 2.50 5.7605 7.11 1.68 6.080 8.7400 8.1905 6.360

100% 29.6300 13.77 14.9000 24.17 12.74 24.430 31.3100 44.8400 43.080We use the same rationale for selecting the smoothness parameter for the Bayesian smoothing operation as in the cases above.

Smooth and label classification

ro_cube_20LMR_ltae_bayes <- sits_smooth(

ro_cube_20LMR_tcnn_probs,

output_dir = tempdir_r,

window_size = 11,

smoothness = c(

"Clear_Cut_Bare_Soil" = 1.2,

"Clear_Cut_Burned_Area" = 10.0,

"Clear_Cut_Vegetation" = 15.0,

"Forest" = 4.0,

"Mountainside_Forest" = 8.0,

"Riparian_Forest" = 25.0,

"Seasonally_Flooded" = 30.0,

"Water" = 0.3,

"Wetland" = 1.8),

multicores = 2,

memsize = 6,

version = "ltae"

)

# generate the classified map

ro_cube_20LMR_ltae_class <- sits_label_classification(

ro_cube_20LMR_ltae_bayes,

output_dir = tempdir_r,

multicores = 2,

memsize = 6,

version = "ltae"

)

# plot the classified map

plot(ro_cube_20LMR_ltae_class,

legend_text_size = 0.7, legend_position = "outside")ro_cube_20LMR_ltae_bayes = sits_smooth(

ro_cube_20LMR_tcnn_probs,

output_dir = tempdir_py,

window_size = 11,

smoothness = dict(

Clear_Cut_Bare_Soil = 1.2,

Clear_Cut_Burned_Area = 10.0,

Clear_Cut_Vegetation = 15.0,

Forest = 4.0,

Mountainside_Forest = 8.0,

Riparian_Forest = 25.0,

Seasonally_Flooded = 30.0,

Water = 0.3,

Wetland = 1.8),

multicores = 2,

memsize = 6,

version = "ltae"

)

# generate the classified map

ro_cube_20LMR_ltae_class = sits_label_classification(

ro_cube_20LMR_ltae_bayes,

output_dir = tempdir_py,

multicores = 2,

memsize = 6,

version = "ltae"

)

# plot the classified map

plot(ro_cube_20LMR_ltae_class,

legend_text_size = 0.7, legend_position = "outside")

22.6 Differences between model results

To understand the differences between the results, it is useful to compare the resulting class areas produced by the different algorithms, expressed in \(km^2\).

# get the summary of the map produced by RF

sum1 <- summary(ro_cube_20LMR_rfor_class) |>

dplyr::select("class", "area_km2")

colnames(sum1) <- c("class", "rfor")

# get the summary of the map produced by TCNN

sum2 <- summary(ro_cube_20LMR_tcnn_class) |>

dplyr::select("class", "area_km2")

colnames(sum2) <- c("class", "tcnn")

# get the summary of the map produced by LTAE

sum3 <- summary(ro_cube_20LMR_ltae_class) |>

dplyr::select("class", "area_km2")

colnames(sum3) <- c("class", "ltae")

# compare class areas of maps produced by the three models

pred_class_areas <- dplyr::inner_join(sum1, sum2, by = "class") |>

dplyr::inner_join(sum3, by = "class")

pred_class_areas# A tibble: 9 × 4

class rfor tcnn ltae

<chr> <dbl> <dbl> <dbl>

1 Clear_Cut_Bare_Soil 81 67 66

2 Clear_Cut_Burned_Area 1.6 3.8 3.9

3 Clear_Cut_Vegetation 19 22 22

4 Forest 280 240 240

5 Mountainside_Forest 0.0008 0.064 0.053

6 Riparian_Forest 46 39 38

7 Seasonally_Flooded 72 130 130

8 Water 63 68 67

9 Wetland 13 10 11 import pandas as pd

# Get classification summaries

sum1 = summary(ro_cube_20LMR_rfor_class)

sum2 = summary(ro_cube_20LMR_tcnn_class)

sum3 = summary(ro_cube_20LMR_ltae_class)

# Get only ``class`` and ``area_km2`` columns

sum1 = sum1[["class", "area_km2"]]

sum2 = sum2[["class", "area_km2"]]

sum3 = sum3[["class", "area_km2"]]

# Rename columns

sum1.columns = ["class", "rfor"]

sum2.columns = ["class", "tcnn"]

sum3.columns = ["class", "ltae"]

# Merge columns

pred_class_areas = pd.merge(sum1, sum2, on = "class", how = "inner")

pred_class_areas = pd.merge(pred_class_areas, sum3, on = "class", how = "inner")

pred_class_areas class rfor tcnn ltae

1 Clear_Cut_Bare_Soil 81.0000 67.000 66.000

2 Clear_Cut_Burned_Area 1.6000 3.800 3.900

3 Clear_Cut_Vegetation 19.0000 22.000 22.000

4 Forest 280.0000 240.000 240.000

5 Mountainside_Forest 0.0008 0.064 0.053

6 Riparian_Forest 46.0000 39.000 38.000

7 Seasonally_Flooded 72.0000 130.000 130.000

8 Water 63.0000 68.000 67.000

9 Wetland 13.0000 10.000 11.000The study area presents many challenges for land classification, given the presence of wetlands, riparian forests and seasonally-flooded areas. The results show the algorithms obtain quite different results, since each model has different sensitivities. The RF method is biased towards the most frequent classes, especially for Clear_Cut_Bare_Soil and Forest. The area estimated by RF for class Clear_Cut_Burned_Area is the smallest of the three models. Most pixels assigned by LTAE and TCNN as burned areas are assigned by RF as being areas of bare soil. The RF algorithm tends to be more conservative. The reason is because RF decision-making uses values from single attributes (values of a single band in a given time instance), while LTAE and TCNN consider the relations between instances of the time series. Since the RF model is sensitive to the response of images at the end of the period, it tends to focus on values that indicate the presence of forests and bare soils during the dry season, which peaks in August. The LTAE and TCNN models are more balanced to the overall separation of classes in the entire attribute space, and produces larger estimates of riparian and seasonally flooded forest than the other methods. In contrast, both LTAE and TCNN make more mistakes than RF in including flooded areas in the center-left part of the image on the left side of the rives as Clear_Cut_Vegetation when the right label would be riparian or flooded forests.

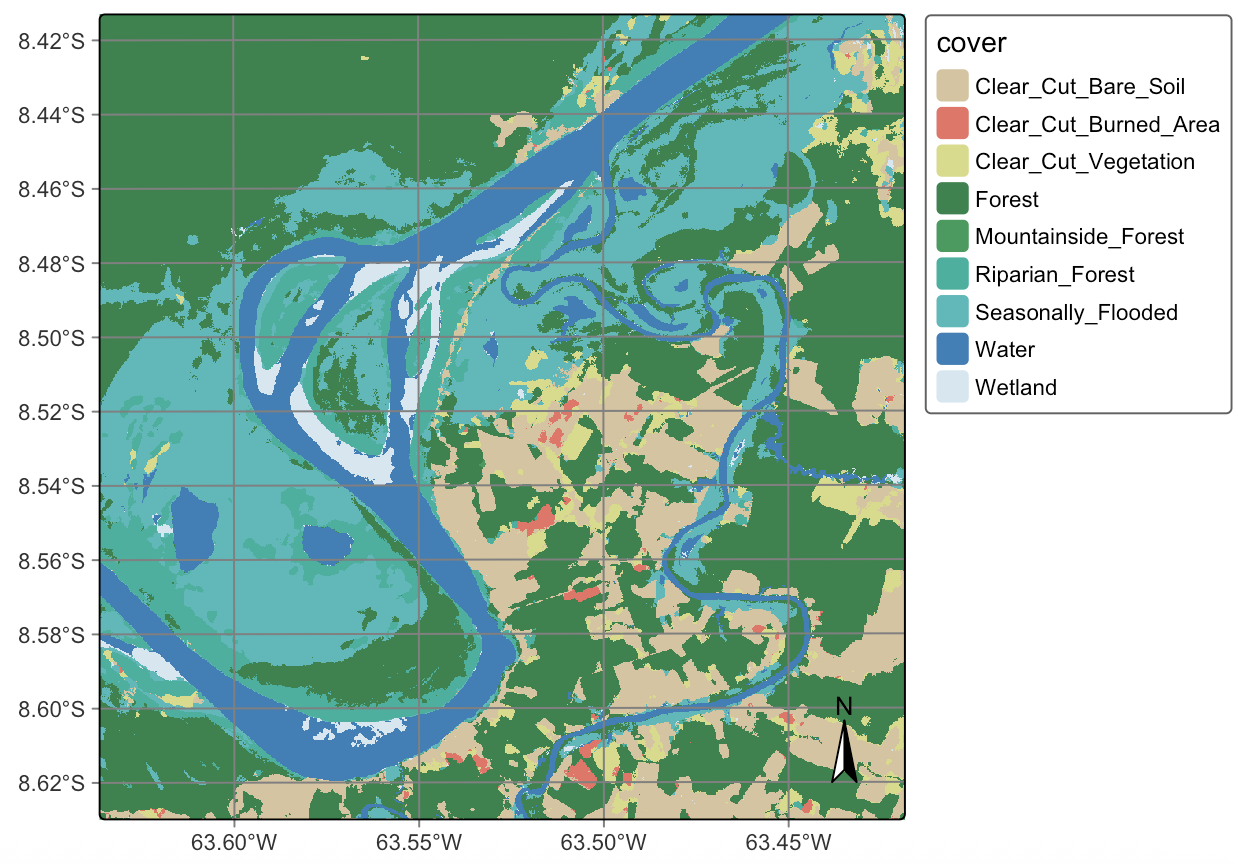

22.7 Combining the different predictions

Given the differences and complementaries between the three predicted outcomes, combining them using sits_combine_predictions() is useful. This function takes the following arguments: (a) cubes, a list of the cubes to be combined. These cubes should be probability cubes generated by which optionally may have been smoothened; (b) type, which indicates how to combine the probability maps. The options are average, which performs a weighted mean of the probabilities, and uncertainty, which uses the uncertainty cubes to combine the predictions; (c) weights, a vector of weights to be used to combine the predictions when average is selected; (d) uncertainty_cubes, a list of uncertainty cubes associated to the predictions; (e) multicores, number of cores to be used; (f) memsize, RAM used in the classification; (g) output_dir, the directory where the classified raster files will be written.

# Combine the three predictions by taking the average of the probabilities for each class

ro_cube_20LMR_average_probs <- sits_combine_predictions(

cubes = list(ro_cube_20LMR_tcnn_bayes,

ro_cube_20LMR_rfor_bayes,

ro_cube_20LMR_ltae_bayes),

type = "average",

version = "average-rfor-tcnn-ltae",

output_dir = tempdir_r,

weights = c(0.33, 0.34, 0.33),

memsize = 16,

multicores = 4

)

# Label the average probability cube

ro_cube_20LMR_average_class <- sits_label_classification(

cube = ro_cube_20LMR_average_probs,

output_dir = tempdir_r,

version = "average-rfor-tcnn-ltae",

memsize = 16,

multicores = 4

)

# plot the classified map

plot(ro_cube_20LMR_average_class,

legend_text_size = 0.7, legend_position = "outside")# Combine the three predictions by taking the average of the probabilities for each class

ro_cube_20LMR_average_probs = sits_combine_predictions(

cubes = [

ro_cube_20LMR_tcnn_bayes,

ro_cube_20LMR_rfor_bayes,

ro_cube_20LMR_ltae_bayes

],

type = "average",

version = "average-rfor-tcnn-ltae",

output_dir = tempdir_py,

weights = (0.33, 0.34, 0.33),

memsize = 16,

multicores = 4

)

# Label the average probability cube

ro_cube_20LMR_average_class = sits_label_classification(

cube = ro_cube_20LMR_average_probs,

output_dir = tempdir_py,

version = "average-rfor-tcnn-ltae",

memsize = 16,

multicores = 4

)

# plot the classified map

plot(ro_cube_20LMR_average_class,

legend_text_size = 0.7, legend_position = "outside")

We can also consider the class areas produced by the ensemble combination and compare them to the original estimates, expressed in \(km^2\).

# get the summary of the map produced by LTAE

sum4 <- summary(ro_cube_20LMR_average_class) |>

dplyr::select("class", "area_km2")

colnames(sum4) <- c("class", "ave")

# compare class areas of non-smoothed and smoothed maps

pred_ave_rfor_tcnn_ltae <- dplyr::inner_join(sum1, sum2, by = "class") |>

dplyr::inner_join(sum3, by = "class") |>

dplyr::inner_join(sum4, by = "class")

pred_ave_rfor_tcnn_ltae# A tibble: 9 × 5

class rfor tcnn ltae ave

<chr> <dbl> <dbl> <dbl> <dbl>

1 Clear_Cut_Bare_Soil 81 67 66 70

2 Clear_Cut_Burned_Area 1.6 3.8 3.9 3.2

3 Clear_Cut_Vegetation 19 22 22 19

4 Forest 280 240 240 250

5 Mountainside_Forest 0.0008 0.064 0.053 0.038

6 Riparian_Forest 46 39 38 41

7 Seasonally_Flooded 72 130 130 120

8 Water 63 68 67 67

9 Wetland 13 10 11 11 # get the summary of the map produced by LTAE

sum4 = summary(ro_cube_20LMR_average_class)

sum4 = sum4[["class", "area_km2"]]

# Rename columns

sum4.columns = ["class", "ave"]

# compare class areas of non-smoothed and smoothed maps

pred_ave_rfor_tcnn_ltae = pd.merge(sum1, sum2, on = "class", how = "inner")

pred_ave_rfor_tcnn_ltae = pd.merge(pred_ave_rfor_tcnn_ltae, sum3, on = "class", how = "inner")

pred_ave_rfor_tcnn_ltae = pd.merge(pred_ave_rfor_tcnn_ltae, sum4, on = "class", how = "inner")

pred_ave_rfor_tcnn_ltae class rfor tcnn ltae ave

1 Clear_Cut_Bare_Soil 81.0000 67.000 66.000 70.000

2 Clear_Cut_Burned_Area 1.6000 3.800 3.900 3.200

3 Clear_Cut_Vegetation 19.0000 22.000 22.000 19.000

4 Forest 280.0000 240.000 240.000 250.000

5 Mountainside_Forest 0.0008 0.064 0.053 0.038

6 Riparian_Forest 46.0000 39.000 38.000 41.000

7 Seasonally_Flooded 72.0000 130.000 130.000 120.000

8 Water 63.0000 68.000 67.000 67.000

9 Wetland 13.0000 10.000 11.000 11.000As expected, the ensemble map combines information from the three models. Taking the RF model prediction as a base, there is a reduction in the areas of classes Clear_Cut_Bare_Soil and Forest, confirming the tendency of the RF model to overemphasize the most frequent classes. The LTAE and TempCNN models are more sensitive to class variations and capture time-varying classes such as Riparian_Forest and Clear_Cut_Burned_Area in more detail than the RF model. However, both TempCNN and LTAE tend to confuse the deforestation-related class Clear_Cut_Vegetation and the natural class Riparian_Forest more than the RF model. This effect is evident in the left bank of the Madeira river in the centre-left region of the image. Also, both the LTAE and TempCNN maps are more grainy and have more spatial variability than the RF map.

The average map provides a compromise between RF’s strong empahsis on the most frequent classes and the tendency of deep learning methods to produce outliers based on temporal relationship. The average map is less grainy and more spatially consistent than the LTAE and TempCNN maps, while introducing variability which is not present in the RF map.

22.8 Summary

This chapter shows the possibilities of ensemble prediction. There are many ways to get better results than those presented here. Increasing the number of spectral bands would improve the final accuracy. Also, Bayesian smoothing for deep learning models should not rely on default parameters; rather it needs to rely on variance analysis, increase the spatial window and provide more informed hyperparameters. In general, ensemble prediction should be consider in all situations where one is not satisfied with the results of individual models. Combining model output increses the reliability of the result and thus should be considered in all situations where similar classes are present.